Introduction

“Everything’s Computer!”: For a New Speculative Philosophy of Computation (Which is to Say, of Life, Intelligence, Automation, and the Compositional Evolution of Planets)

Can we create the Philosophy of the 21st century before it is too late? Can it grasp the dizzying implications of planetary computation as an increasingly complexifying epistemological, scientific, and geopolitical reality?

Artificial intelligence; neuromorphic computation, quantum computing, and biochemical computing; large-scale scientific simulations; chip wars; financial digitalization, both centralized and decentralized; interlocking ID systems; infrastructural electrification; the global intensification of discontiguous chains of automation; and, perhaps most importantly, informational theories of biology that narrativize the scaffolding cascades of complexity we call “life”, planetary intelligence, orienting its own precarious future.

Sciences are born when philosophy learns to ask the right questions; their potential is suppressed when it does not. New philosophy is born when new technologies force it to invent new concepts. Today, the relationship between the humanities and science is one of critical suspicion, a state of affairs that frustrates the development of not only philosophy but also new sciences to come.

This means not just applying concepts but also inventing them. While this puts our work in a slightly heretical position in relation to the current orientations of the humanities, it is well placed to develop the school of thought for the speculative philosophy of computation that will frame new and fertile lines of inquiry for a future in which science, technology, and philosophy convene within whatever supersedes the humanities as we know it.

Lunar Orbiter 1

There are historical moments in which humanity’s speculative imagination far outpaces its technological capacities. Those times overflow with utopias. At others, however, “our” technologies’ capabilities and implications outpace the concepts we have to describe them, let alone guide them. The present is more the latter than the former. At this moment, technology—and particularly planetary-scale computation—has outpaced our theory. We face something like a civilization-scale computational overhang. Human agency exceeds human wisdom. For philosophy, it should be a time of invention.

Too often, however, the response is to force comfortable and settled ideas about ethics, scale, polity, and meaning onto a situation that not only calls for a different framework but is already generating a different framework.

The response goes well beyond applying inherited philosophical concepts to the present day. The goal, as we joke, is not to ask, “What would Kant make of driverless cars?” or “What would Heidegger lament about large language models?” but rather to allow for the appearance and cultivation of a new school of philosophical/technological thought that can both account for the qualitative implications of what is here and now and contribute to that thought’s compositional orientation. The present alternatives are steeped in sluggish scholasticism: Asking if AI can genuinely “think” according to the standards set forth by Kant in the Critique of Pure Reason is like asking if this creature discovered in the New World is actually an “animal” as defined by Aristotle. It’s obvious the real question is how the new evidence must update the category, not how the received category can judge reality.

A better way to “do philosophy” is to actively experiment with the technologies that make contemporary thought possible and to explore the fullest space of that potential. Instead of simply applying philosophy to the topic of computation, Antikythera starts from the other direction and produces theoretical and practical conceptual tools—the speculative—from living computational media. In the twenty-first century, the instrumental and existential implications of planetary computation challenge how planetary intelligence comes to comprehend its own evolution, its predicament, and its possible futures, both bright and dark.

Computation is born of cosmology

The closely bound relationship between computation and planetarity is not new. It begins with the very first artificial computers (we hold that computation was discovered as much as it was invented, and that the computational technologies humans produce are artifacts that make use of a natural phenomenon).

Antikythera takes its name from the Antikythera mechanism, first discovered in 1901 in a shipwreck off the Greek island of the same name and dated to roughly the second century BCE. This primordial computer was more than a calculator; it was an astronomical machine—mapping, tracking, and predicting the movements of stars and planets, marking annual events, and guiding its users on the surface of the globe. The mechanism not only calculated interlocking variables but also provided an orientation of thought in relation to the astronomic predicament of its users. Using the mechanism enabled its users to think and act in relation to what was revealed through the mechanism’s perspective.

This is an augmentation of intelligence, but intelligence is not just something that a particular species or machine can do. In the long term, it evolves through the scaffolding interactions between multiple systems: genetic, biological, technological, linguistic, and more. Intelligence is a planetary phenomenon.

The name Antikythera refers more generally to computational technology that discloses and accelerates the planetary condition of intelligence. More than one particular mechanism, it is a growing genealogy of technologies, some of which—like planetary computation infrastructures—we not only use but also inhabit.

Computation is calculation as world ordering; it is a medium for the complexification of social intelligence.

Computation takes the form of planetary infrastructure that remakes philosophy, science, and society in its image.

How does Antikythera define computation? For Turing, it was a process defined by a mathematical limit of the incalculable, but as the decades since his foundational papers have shown, there is little in life that cannot be modeled and represented computationally. This process, like all models, is reductive. A map reduces territory to an image, but that is how it becomes useful as a navigational tool. Similarly, computational models and simulations synthesize data in ways that demonstrate forms and patterns that would be otherwise inconceivable.

As a rules-based, output-generating operation, computation has general and specific definitions, including biological analogical processing of very local information and Universal Turing machines, general recursive functions, and the defined calculations of almost anything at all.

Antikythera presumes that computation was discovered as much as it was invented. It is not so much that natural computation works like modern computing devices but rather that modern computing devices and formulations are quickly evolving approximations of natural computation—genetic, molecular, neuronal, etc.

Computation as a principle may be near universal, but computation as a societal medium is highly malleable. Its everyday affordances are seemingly endless. However, computational technologies evolve, and societies evolve in turn. For example, in the decades to come, what is called “AI” may no longer be simply a novel application for computation but its primary societal-scale form. Computation would be not just an instrumentally focused calculation but the basis of widespread non-biological intelligence.

Through computational models, we perceive existential truths about a great many things: human genomic drift through history, the visual profile of astronomic objects millions of light-years away, the extent of anthropogenic agency and its climatic effects, the neurological foundations of thought itself… The qualitative profundity of these begins with a quantitative representation. The math discloses reality, and reality demands new philosophical scrutiny.

Allocentrism in philosophy and engineering

Computation is no more a tool than language is. Both language and computation are constitutive of thought and the encoding and communication of symbolic reasoning. Both evolve in relation to how they affect and are affected by the world, and yet both retain something formally unique. That machine intelligence would evolve through language as much as language will in the foreseeable future evolve through machines suggests a sort of artificial convergent evolution. More on that below.

What does Antikythera mean by “computation” and what is its slice of that spectrum? Our approach is slightly off kilter from how the philosophy of computation is, at present, usually practiced. Philosophy, in its analytic mode, interrogates the mathematical procedure of computation and seeks to qualify those formal properties. It bridges logic and the philosophy of mathematics in often exquisitely productive but sometimes arid ways. Our focus, however, is less on this formal uniqueness than on the mutually influential evolution of computation and the world, and how each becomes a scaffold for the development of the other.

For its part, continental philosophy is suspicious and dismissive of, and even hostile to, computation as a figure of power, reductive thought, and instrumental rationality. It devotes considerable time to the often obscure, prosy criticism of all that it imagines computation to be and do. As a rule, both spoken and unspoken, it valorizes the incomputable over the computable, the ineffable over the effable, the analog over the digital, the poetic over the explanatory, and so on.

Our approach is also qualitative and speculative, but instead of seeing philosophy as a form of resistance to computation, we see computation as a challenge to thought itself. Computation is not that which obscures our view of the real but that which has, over the past century, been the primary enabler of confrontations with the real. These confrontations are sometimes startling and even disturbing but always precious. This makes us neither optimists nor pessimists, but rather deeply curious and committed to building and rebuilding from first principles rather than commentary on passing epiphenomena.

Artificial life simulation by Alexander Mordvintsev

Our philosophical standpoint is allocentric more than egocentric, “Copernican” more than phenomenological. The presumption is that we will, as always, learn more about ourselves by getting outside our own heads and perspectives, almost always through technological mediation, than we will by private rumination on the experience of interiority or by mistaking culture for history. That said, even from an outside perspective looking back on ourselves, we (“humans”) are not necessarily the most important thing for philosophy to examine. The vistas are open.

Most sciences grew out of philosophy and did so by stepping outdoors and playfully experimenting with the world as it is. Instead of science composing new technologies to verify its curiosity, the inverse is perhaps even more often the case: New technologies devised for one purpose end up changing what is perceivable and thus what is hypothesized; and thus, they change what science seeks. The allocentric turn does not imply that human sapience is not magnificent, but it does locate it differently than it may be used to. It is true that Homo sapiens is the species that wrote this and is presumably reading this (the most important reader may be a future LLM), but we are merely the present seat of the intensification of abstract intelligence, which is the real force and actor. We are the medium, not the message. If Antikythera might eventually contribute to the philosophical explorations of what in due time becomes a science of understanding the relationship between intelligence and technology (and life) as intertwined planetary phenomena—to ask the questions that can only be answered by that—then we will have truly succeeded.

Planetary Computation

The Anthropocene Is a Second-Order Concept Derived from Computation

I have told this story many times. Imagine the famous blue marble image as a movie, one spanning all 4.5 billion years of Earth’s development. Watching this movie on super fast-forward, one would see the planet turn from red to blue and green, see continents form and break apart, see the emergence of life and an atmosphere, and, in the last few seconds, see something else that is remarkable. The planet would almost instantaneously grow an external layer of satellites, cities, and various physical networks, all of which constitute a kind of sensory epidermis or exoskeleton. In the last century, Earth has grown this artificial crust, through which it has realized incipient forms of animal–machinic cognition with terraforming-scale agency. This is planetary computation. It is not just a tool; it is a geological cognitive phenomenon.

It is this phenomenon—planetary computation defined in this way—that is Antikythera’s core interest. The term has at least two essential connotations: first, it refers to a global technological apparatus; second, it refers to all the ways that that apparatus reveals planetary conditions in a manner otherwise unthinkable. For the former, computation is an instrumental technology that enables new perceptions of and interactions with the world; for the latter, it is an epistemological technology that shifts fundamental presumptions about what is possible to know about the world at all.

For example, the scientific idea of “climate change” is an epistemological accomplishment of planetary-scale computation. The multiscalar and accelerating rate of change is knowable because of data gleaned from satellites, surface and ocean temperatures, and most of all models derived from supercomputing simulations of the planetary past, present, and futures. As such, computation has made the contemporary notions of the “Planetary” and the “Anthropocene” conceivable, accountable, and actionable. These ideas, in turn, established that over the past centuries, anthropogenic agency has had terraforming-scale effects. Every discipline is reckoning in its own way with the implications of this; some better than others.

As the Planetary is now accepted as a “humanist category,” it is worth emphasizing that the actual planets, including Earth, are rendered as stable objects of knowledge that have been made legible largely through first-order insights gleaned from computational perceptual technologies. It becomes a humanist category both as a motivating idea that puts the assembly of those technologies in motion and, later, as a (precious) second-order abstraction derived from what they show us.

The Planetary is a term with considerable potential philosophical weight but also a lot of gestural emptiness. It is, as suggested, both a cause and an effect of the recognition of “the Anthropocene.” But what is that? I say “recognition” because the Anthropocene was occurring long before it was deduced to be happening. Whether you start at the beginning of agriculture ten thousand years ago or the Industrial Revolution a few hundred years ago, or the pervasive scattering of radioactive elements more recently, the anthropogenic transformation of the planet was an “accidental terraforming.” It was not the plan.

After years of debate as to whether the term deserves the status of proper geologic epoch, the most recent decision is to identify the Anthropocene as an event, as the Great Oxidation is an event or the Chicxulub meteor impact is an event. This introduces more plasticity into the concept. Events are unsettled and transformative but not necessarily final. Anthropogenic agency can and likely will orient this event to a more deliberate conclusion. For its part, computation will surely make this orientation possible, just as it made legible the situation in which it moves.

Computation is Now the Primary Technology for the Artificialization of Functional Intelligence, Symbolic Thought, and Life Itself

Computation is, for us, not only a formal, substrate-agnostic, recursive calculative process; it is also a means of world ordering. From the earliest marks of symbolic notation, computation was a foundation of what would become complex culture. The signifiers on clay in Sumerian cuneiform are known as a first form of writing; in fact, they are indexes of transactions, an inscriptive technique that would become pictograms and over time alphanumeric writing, including base-10 mathematics and formal binary notation. There and then in Mesopotamia, the first writing was “accounting”: a kind of database representing and ordering real-world communication. This artifact of computation prefigures the expressive semiotics, even literary writing, that ensued in the centuries to come.

Over recent centuries, and accelerating during the mid-twentieth century, technologies for the artificialization of computation have become more powerful, more efficient, more microscopic, and more globally pervasive, changing the world in their image. “Artificialization” in this context doesn’t mean fake or unnatural; rather, it means that the intricate complexity of modern computing chips, hardware, and software did not evolve blindly but was the result of deliberate conceptual prefiguration and composition, even if by accident. The evolutionary importance of this general capacity for artificialization will become clearer below.

Planetary Computation Reveals and Constructs the Planetary as a “Humanist Category”

Some of the most essential and timeless philosophical questions revolve around the qualities of perception, representation, and time. Together and separately, these have all been radicalized by planetary computational technologies, in no domain more dramatically than in astronomy.

The Webb deep space telescope scans the depths of the universe, producing data that we make into images showing us, among other wonders, light from a distant star bending all the way around the gravitational cluster of galaxies. From such perceptions we, the little creatures who built this machine, learn a bit more about where, when, and how we are. Computation is not only a topic for philosophy to pass judgment on; computation is itself a philosophical technology. It reveals conditions that have made human thought possible.

Antikythera is a philosophy-of-technology program that diverges in vision and purpose from the mainstream of philosophy of technology, particularly from the intransigent tradition growing from the work of Martin Heidegger, whose near mystical suspicion of scientific disenchantment, denigration of technology as that which distances us from Being and reduces the world to numeric profanity, and—most of all—outrage at innovations of perception beyond the comfort of grounded phenomenology has confused generations of young minds. They have been misled. The question concerning technology is not how it alienates us from the misty mystery of Being but how every Copernican turn we have taken—from heliocentrism to Darwinism to neuroscience to machine intelligence—has been possible only by getting outside our own skin to see ourselves as we are and the world as it is. This is closeness to Being.

Computation Reveals the Planetary Condition of Intelligence to Itself

To look up into the night sky with an unaided eye is to gaze into a time machine showing us an incomprehensibly distant past. It is to perceive light emitted before most of us were born and even before modern humans existed at all. It took well into the eighteenth century for science to realize that stripes of geologic sedimentary layers do not just mark an orderly spatial arrangement but represent the depths of planetary time. The same principle (space is time is space) holds as you look out at the stars, but on a vastly larger scale. To calculate those distances in space and time is only possible once their scales are philosophically and mathematically and then technologically abstractable. Such is the case with black holes, first described mathematically and then, in an image released in 2019, directly perceived by Earth itself, having been turned into a giant computer vision machine.

Event Horizon Telescope was an array of multiple terrestrial telescopes all aimed at a single point in the night sky. Its respective scans were timed with and by the Earth’s rotation, and thus the planet itself was incorporated into this optical mechanism. Event Horizon connected the views of telescopes on the Earth’s surface into ommatidia of a vast compound eye, a sensory organ that captured fifty-million-year-old light from the center of the M87 galaxy as digital information. This data was then again rendered in the form of a ghostly orange disc that primates, such as ourselves, recognize as an “image.” Upon contemplating it, we can identify our place within the universe, which exceeds our immediate comprehension but not entirely our technologies of representation. With computational technologies such as Event Horizon, it’s possible to imagine our planet not only as a lonely blue spot in the void of space but also as a singular form that finally opens its eye to perceive its distant environment.

For Antikythera, this is what is meant by “computational technology disclosing and accelerating planetary intelligence.” Feats such as this demonstrate what planetary computation is for.

Research Program

Having hopefully drawn a compelling image of the purpose of Antikythera as a generative theoretical project, I will put this image in motion and describe how the program does its work. As you might expect, it is not in the usual way and it is deliberately tuned for the messy process of concept generation, articulation, prototyping, narrativization, and, ultimately, convergence.

The Link Between Philosophy and Engineering Is a More Fertile Ground Than That Between the Humanities and Design

Antikythera is a philosophy-of-technology research program that uses studio-based speculative design methodologies to provoke, conceive, explore, and experiment with new ideas. At the same time, it is often characterized as a speculative design research program driven by a focused line of inquiry into the philosophy of technology. Yet, neither framing is precisely right.

As I have implied, within the academic subfield of philosophy of technology, Antikythera is positioned opposite the deeply rooted legacy of Heideggerian critique that sees technology as a source of existential estrangement. So perhaps our approach is the opposite of “philosophy of technology”? Technology of philosophy? Maybe. At the same time, despite the long-standing crucial role of thought experiments in advancing technological exploration, the term “speculative design” has unfortunate connotations of whimsical utopian/dystopian pedantic design gestures. While Antikythera is appreciative of the inspiration the humanities provides to design, this must be more than simply injecting the latest cultural-theoretical trend into the portfolios of art students.

A more precise framing may be a renewed conjunction of philosophy and engineering. “Engineering” is often seen as barren terrain for the high-minded abstractions of philosophy, but that’s exactly the problem. Functionalism is not the enemy of creativity but, as a constraint, perhaps its most fertile niche. By this, I don’t mean a philosophy of engineering. Instead, I am referring to a speculative philosophy drawn from a curious, informed, and provocative encounter with the technical means by which anthropogenic agency remakes the world in its image and, in turn, the uncertain subjectivities that emerge, or fail to emerge, from that difficult recognition of its dynamics. Obviously, today those technical means are largely computational; hence, our program for the assembly of a new school of thought for the speculative philosophy of technology focuses its attention specifically on computation.

This may not locate Antikythera in the mainstream of the humanities, philosophy, or science and technology studies, and perhaps rightly so. Instead, it may position the program to accomplish things it otherwise could not. Many sciences began as a subject matter in philosophy: from physics to linguistics, from economics to neuroscience. This is certainly true for computer science, as it congealed from the philosophy of mathematics, logic, astronomy, cybernetics, and more. Of all computational technologies, AI in particular emerged through a nonlinear genealogy of thought experiments spanning centuries before it became anything like the functional technologies realized today, which, in turn, redirected those thought experiments. This is also what is meant by the conjunction of philosophy and engineering.

Furthermore, this suggests that the ongoing “science wars”—which the humanities absolutely did not win—are all the more unfortunate. The orthodox project is to debunk, resist, and explain away the ontologically challenging assignments that technoscience puts forth with the comforting languages of social reductionism and cultural determinism. This delays the necessary development of the humanities, a self-banishment to increasingly shrill irrelevance that conceals rather than reveals the extent to which philosophy is where new sciences come from or can be the co-creation of those sciences to come.

It need not be so. There are many ways to reinvent the links between speculative and qualitative reason and functional qualitative creativity. Antikythera’s approach is just one.

Conceive, Convene, Communicate

So what is the method by which we attempt to build this school of thought? The approach is multifaceted but comes down to three things: (1) the tireless development of a synthetic and hopefully precise conceptual vocabulary, posed both as definitional statements and as generative framing questions with which to expand and hone our research; (2) the convening of a select group of active minds intrigued by our provocation, eager to collaborate with those from other disciplines and backgrounds; and (3) investments in the design of the communication of this work, such that each bit adds to an increasingly high-resolution mosaic that constitutes the Antikythera school of thought. The ideas and implications of those outcomes feed back into the conceptual generative framing of the next cycle. Each go-around, the school of thought gets bigger, leaner, and more cutting.

This means a division of labor spread across a growing network. We work with existing institutions in ways that they may not be able to work in on their own. Our affiliate researchers come from Cambridge, MIT, the Santa Fe Institute, Caltech, SCI-Arc, Beijing University, Harvard, UC San Diego, Oxford, Central Saint Martins, UCLA, Yale, Eindhoven, Penn, New York University-Shanghai, Berkeley, Stanford, University of London, and many more. More important than the brand names on their uniforms is their disciplinary range: computer science and philosophy obviously, architecture, digital media, literature, filmmaking, robotics, mathematics, astrophysics, biology, history, and cognitive neuroscience.

At least once a year, Antikythera hosts an interdisciplinary design research studio in which we invite and support fifteen younger and midcareer researchers to work with us on new questions and problems and generate speculative engineering works, world-building cinematic works, and formal papers. We have hosted studios in Los Angeles, Mexico City, London, and Beijing. Studios draw applicants from around the world and various disciplines, from computer scientists to science-fiction authors, mathematicians, game designers, and of course philosophers.

At our Planetary Sapience symposium at MIT Media Lab, we recently announced a collaboration with MIT Press: a book series and peer-reviewed digital journal that will serve as the primary platform for publishing the work of the program as well as intellectually related work from a range of disciplines. The first “issue” of the digital journal will go live in concert with a launch event at the Venice Architecture Biennale next spring. The first title in the book series, What is Intelligence? by Blaise Agüera y Arcas, will hit the shelves in fall 2025. The digital journal will publish original and classic texts as imagined and designed by some of the top digital designers working today. It will showcase cutting-edge work in both the speculative philosophy of computation and digital design, together establishing a communications platform most appropriate for the ambitions of the work. Each article in the upcoming issue is discussed below in the context of the Antikythera research track to which it most directly contributes.

Antikythera is made possible by the generosity and far-sighted support of the Berggruen Institute, based in Los Angeles, Beijing, and Venice, under the leadership of Nicolas Berggruen, Nathan Gardels, Nils Gilman, and Dawn Nakagawa.

Research Areas

Antikythera’s research is roughly divided into four key tracks, each building off the core theme of planetary computation. They each can be defined in relation to one another, and as the program evolves, new ideas are consolidating as our research into these various areas deepens.

As mentioned, Planetary Computation refers to both the emergence of artificial computational infrastructures at a global scale and the revelation and disclosure of planetary systems as topics of empirical scientific interest, with “the Planetary” as a qualitative conceptual category. This is considered through four non-exclusive and non-exhaustive lenses.

Synthetic Intelligence refers to the emergence of artificial machine intelligence in both anthropomorphic and automorphic forms as well as a complex and evolving distributed system. In contrast with many other approaches to AI, we emphasize (1) the importance of productive misalignment and the epistemological and practical necessity of avoiding alignment overfitting to dubiously defined human values and (2) the eventual open-world artificial evolution of synthetic hybrids of biological and non-biological intelligences, including infrastructure-scale systems capable of advanced cognition.

Recursive Simulations refers to the process by which computational simulations reflexively or recursively affect the phenomena that they represent. Different forms of predictive processing underpin diverse types of evolved and artificial intelligence. At a collective scale, this enables complex societies to sense, model, and govern their development. In this, simulations become essential epistemological technologies for the understanding of phenomena that are otherwise imperceptible, as simulations compress time.
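As a loose illustration of that recursion, and not a description of any model Antikythera actually uses, consider a toy system in which a forecast of a growing quantity is allowed to change the growth it forecasts. Every name and number here (run, growth, limit, horizon) is a hypothetical chosen for the sketch.

```python
def run(steps, feedback, growth=1.10, limit=100.0, horizon=5):
    """Toy system: a quantity grows each step; a forecast may alter that growth.

    All parameters are illustrative assumptions, not empirical values.
    """
    level = 10.0
    history = []
    for _ in range(steps):
        # The "simulation": project the current trend a few steps ahead.
        forecast = level * growth ** horizon
        rate = growth
        if feedback and forecast > limit:
            # Acting on the forecast halts the very trend the forecast described.
            rate = 1.0
        level *= rate
        history.append(level)
    return history


# Without feedback the model only observes; with feedback it also intervenes.
open_loop = run(30, feedback=False)
closed_loop = run(30, feedback=True)
```

In the open-loop run the quantity passes the limit; in the closed-loop run it never does, because the projection of a breach is what prevents the breach. The simulation has become part of the phenomenon it represents.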

Hemispherical Stacks examines the implications of multipolar computation and multipolar geopolitics, one in terms of the other. It considers the competitive and cooperative dynamics of computational supply chains and both adversarial and reciprocal relations between states, platforms, and regional bodies. Multiple scenarios are composed about diverse areas of focus, including chip wars, foundational models, data sovereignty, and astropolitics.

Planetary Sapience attempts to locate the artificial evolution of computational intelligence within the longer historical arc of the natural evolution of complex intelligence as a planetary phenomenon. The apparent rift between scientific and cultural cosmologies, between what is known scientifically and cultural worldviews, is posited as an existential problem. This problem cannot be solved by computation as a medium but only by the renewal of a speculative philosophy that addresses life, intelligence, and technology as fundamentally integrated processes. More on this below.

Synthetic Intelligence

The Eventual Implication of the Artificialization of Intelligence is Less Humans Teaching Machines How to Think than Machines Demonstrating that Thinking Exists on a Much Wider and Weirder Spectrum

Synthetic intelligence refers to the wider field of artificially composed intelligent systems that do and do not correspond to humanism’s traditions. These systems, however, can complement and combine with human cognition, intuition, creativity, abstraction, and discovery. Inevitably, both human cognition and the artificialized intelligence are forever altered by such diverse amalgamations.

The history of AI and the history of the philosophy of AI are intertwined, from Leibniz to Turing to Dreyfus to today. Thought experiments drive technologies, which drive a shift in the understanding of what intelligence itself is and might become, one influencing the other. This extends well beyond the European philosophical tradition. In our work, important touchstones include those drawn from Deng-era China’s invocation of cybernetics as the basis of industrial mass mobilization and the Eastern European connotations of AI, which include what Stanisław Lem called an “existential” technology. Many of these touchstones contrast with the individualized, individualistic, and anthropomorphic Western models that dominate contemporary debates on so-called AI ethics and safety.

Historically, AI and the philosophy of AI have evolved in a tight coupling, informing and delimiting one another. But as the artificial evolution of AI accelerates, the conceptual vocabulary that has helped bring it about may not be sufficient to articulate what it is and what it might become. Now, as before, not only is AI defined in contrast with the strangely protean image of the human, but the human is defined in contrast with the machine. By habit it is taken almost for granted that we are all that which it is not, and it is all that which we are not. Like two characters sitting across from one another, deciding whether the other is a mirror reflection or a true opposite, each is supposedly the measure and limit of the other.

People see themselves and their society in the reflection AI provides and are thrilled and horrified by what it portends. But this reflection is also preventing people from understanding AI, its potential, and its relationship to human and nonhuman societies. A new framework is needed to grasp the implications.

What is reflected back is not necessarily human-like. The view is beyond anthropomorphic notions of AI and toward a fundamental concern with machine intelligence. What Turing proposed in his famous test as a sufficient condition of intelligence has instead turned into decades of solipsistic demands and misrecognitions. Idealizing what appears and performs as most “human” in AI—either as praise or as criticism—is to willfully constrain the understanding of existing forms of machine intelligence as they are.

Seriously pondering the planetary pasts and futures of AI not only extends but also alters our notions of “artificiality” and “intelligence” and draws from the range of such connotations. However, it will also, inevitably, leave them behind.

The Weirdness Right in Front of Us

This weirdness includes the new unfamiliarity of language itself. If language was, as the structuralist would have it, the house that humans live in, now, as machines spin out coherent ideas at rates just as inhuman as their mathematical answers, the home once provided by language is quite uncanny.

Large language models’ (LLMs’) eerily convincing text prediction/production capabilities have been used to write novels and screenplays and make images, movies, songs, voices, and symphonies. They are even used by some biotech researchers to predict gene sequences for drug discovery—here, at least, the language of genetics really is a language. LLMs also form the basis of generalist models capable of mixing inputs and outputs from one modality to another (e.g., interpreting what is in an image so as to instruct the movement of a robot arm). Such foundational models may become a new kind of general-purpose public utility around which industrial sectors organize: cognitive infrastructures.

Whither speculative philosophy then? As a coauthor and I wrote recently, “reality overstepping the boundaries of comfortable vocabulary is the start, not the end, of the conversation. Instead of groundhog-day debates about whether machines have souls, or can think like people imagine themselves to think, the ongoing double-helix relationship between AI and the philosophy of AI needs to do less projection of its own maxims and instead construct more nuanced vocabularies of analysis, critique, and speculation based on the Weirdness right in front of us.” And that is really the focus of our work: the weirdness right in front of us and the clumsiness of our languages in engaging with it.

Asking if AI can genuinely 'think' according to the standards set forth by Kant in Critique of Pure Reason is like asking if this creature discovered in the New World is actually an 'animal' as defined by Aristotle. It’s obvious the real question is how the new evidence must update the category, not how the received category can judge reality.

The fire apes figured out how to make the rocks think

To zoom out and try to locate such developments in the longer arc of the evolution of intelligence, what has been recently accomplished is truly mind bending. One way to think about it, going back to our blue marble movie mentioned above, is that we’ve had many millions of years of animal intelligence, which became Homo sapiens’s intelligence. We’ve had many millions of years of vegetal intelligence. And now we have mineral intelligence. The fire apes (that’s us) have managed to fold little bits of rocks and metal into particularly intricate shapes and run electricity through them, and now the lithosphere is able to perform feats that until very recently only primates had been able to perform. This is big news. The substrate of complex intelligence, us, now includes both the biosphere and the lithosphere. And it’s not a zero-sum situation. We are beginning to be able to ask how these integrate in such a way that they become mutually reinforcing, and not mutually antagonistic.

Alignment of AI with Human Wants and Needs is a Necessary Short-Term Tactic and an Insufficient and Even Dangerous Long-Term Norm

What does it mean to ask machine intelligence to “align” to human wishes and self-image? Is this a useful tactic for design or a dubious metaphysics that obfuscates how intelligence as a whole might evolve? How should we rethink this framework in both theory and practice?

The emergence of machine intelligence must be steered toward planetary sapience in the service of viable long-term futures. Instead of strong alignment with human values and superficial anthropocentrism, this steerage of AI means treating these humanisms with nuanced suspicion and recognizing AI’s broader potential.

At stake is what AI is and what a society is, and what AI is for. What should align with what?

Hence, this is not only about how AI must evolve to suit the shape of human culture but also about how human societies will evolve in relationship to this fundamental technology. AI overhang (the unused or unrealized capacity of AI that has not yet been, if it ever will be, acclimated into sociotechnical norms) affects not only narrow domains but also, arguably, civilizations and how they understand and register their own organization in the past, present, and future. As a macroscopic goal, simple “alignment” of AI to existing human values is inadequate and even dangerous. The history of technology suggests that the positive impacts of AI will not occur through its subordination to or mimicry of human intuition. The telescope did not only magnify what could be seen; it changed how we see and how we see ourselves. Productive disalignment, which means dragging society toward fundamental insights of AI, is just as essential.

Always remember that everything you do, from the moment you wake up to the moment you fall asleep, is training data for the future’s model of today.

Cognitive Infrastructures: Open World Evolution

Any point of alignment or misalignment between human and machine intelligence, between evolved and artificial intelligence, will converge at the crucial interfaces between each. Human–computer interaction (HCI) gives way to human–AI interaction design (HAIID), an emerging field that contemplates the evolution of HCI in a world where AI can process complex psychosocial phenomena. The anthropomorphization of AI often leads to weird “folk ontologies” of what AI is and what it wants. Drawing on perspectives from a global span of cultures, mapping the odd and outlier cases of HAIID gives designers a wider view of possible interaction models. But instead of looking at single-user relations with chatbot agents, we turn our attention to the great outdoors and the evolution of synthetic intelligence in the wild.

Natural intelligence evolved in open worlds in the past, and so the presumption is that we should look for ways in which machine intelligence will evolve in the present and future through open worlds as well. This also means that AI’s substrates of intelligence may be quite diverse and don’t necessarily need to be human brain tissue or silicon; they may take many different forms. In other words, instead of starting with the model of AI as a kind of brain in a box, we prefer to start with the question of AI in the wild: something that interacts with the world, in many strange and unpredictable ways.

Natural intelligence also emerges at an environmental scale and in the interactions of multiple agents. It is located not only in brains but also in active landscapes. Similarly, artificial intelligence is not contained within single artificial minds but extends throughout the networks of planetary computation: it is baked into industrial processes, it generates images and text, it coordinates circulation in cities, and it senses, models, and acts in the wild.

As artificial intelligence becomes infrastructural, and as societal infrastructures concurrently become more cognitive, the relation between AI theory and practice needs realignment. Across scales—from world datafication and data visualization to users and user interfaces (UIs) and back again—many of the most interesting problems in AI design are still embryonic.

This represents an infrastructuralization of AI but also a “making cognitive” of both new and legacy infrastructures. These are capable of responding to us, to the world, and to each other in ways we recognize as embedded and networked cognition. AI is physicalized, from user interfaces on the surface of handheld devices to deep below the built environment.

Individual users will interact with big models, and multiple combinations of models will interact with groups of people in overlapping configurations. Perhaps the most critical and unfamiliar interactions will unfold between different AIs without human interference. Cognitive infrastructures are forming, framing, and evolving a new ecology of planetary intelligence.

How might this shape HAIID? What happens when the production and curation of data occurs for increasingly generalized, multimodal, and foundational models? How might the collective intelligence of generative AI make the world not only query-able but also re-composable in new ways? How will simulations collapse the distances between the virtual and the real? How will human societies align toward the insights and affordances of artificial intelligence, rather than AI bending to human constructs? Ultimately, how will the inclusion of a fuller range of planetary information, beyond traces of individual human users, expand what counts as intelligence?

Recursive Simulations

Simulation, Computation, and Philosophy

Foundations of Western philosophy are based on a deep suspicion of simulations. In Plato’s allegorical cave, the differentiation between the world and its doubles, its form, and its shadows takes priority in the pursuit of knowledge. Today, however, the real comes to comprehend itself through its doubles: The simulation is the path toward knowledge, not away from it.

From anthropology to zoology, every discipline produces, models, and validates knowledge through simulations. Simulations are technologies to think with, and in this sense they are fundamental epistemological technologies. And yet, they are deeply under-examined: a practice without a theory.

Some computational simulations are designed as immersive virtual environments where experience is artificialized. At the same time, scientific simulations do the opposite of creating deceptive illusions; they are the means by which otherwise inconceivable underlying realities are accessible to thought. From the infinitesimally small in the quantum realm to the inconceivably large in the astro-cosmological realm, computational simulations are not just a tool; they are a technology for knowing what is otherwise unthinkable.

Simulations do more than represent: they are also active and interactive. “Recursive simulations” refers to simulations that depict the world and also act on what they simulate, completing a cybernetic cycle of sensing and governing. They not only represent the world but also organize it in relation to how they summarize and rationalize it. Recursive simulations include everything from financial models to digital twins, user interfaces to prophetic stories. They cannot help but transform the thing they model, which in turn transforms the model and the modeled in a cyclical loop.
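The loop described above can be made concrete with a deliberately small toy. The sketch below is only an illustration under stated assumptions: the function name, the growth rate, the threshold, and the damping factor are all hypothetical and are not drawn from any Antikythera project. A model forecasts a quantity, a governing response acts on the forecast, the world changes because of that response, and the model is then re-fit to the world it helped alter.

```python
def run_recursive_simulation(steps=5, initial_level=100.0, growth=1.10,
                             threshold=120.0, damping=0.5):
    """Toy recursive simulation (all parameters are illustrative assumptions).

    Returns a list of (forecast, intervened, new_level) tuples, one per step.
    """
    level = initial_level        # the "world": the quantity being modeled
    estimated_growth = growth    # the model's current belief about the world
    history = []
    for _ in range(steps):
        # Sense and model: project the next state from the current belief.
        forecast = level * estimated_growth
        # Govern: act only if the projected future crosses the threshold.
        intervened = forecast > threshold
        actual_growth = growth
        if intervened:
            # The response to the forecast dampens the real growth rate.
            actual_growth = 1.0 + (growth - 1.0) * damping
        new_level = level * actual_growth
        # Recursion: the model is re-fit to a world its own forecast changed.
        estimated_growth = new_level / level
        history.append((forecast, intervened, new_level))
        level = new_level
    return history


if __name__ == "__main__":
    for forecast, intervened, new_level in run_recursive_simulation():
        print(f"forecast={forecast:.2f} intervened={intervened} level={new_level:.2f}")
```

In this toy the forecast never comes true once it triggers a response, which is the point: the model is judged against a world it has already reorganized.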

The Politics of Simulation and Reality

We live in an era of highly politicized simulations, for good and for ill. The role of climate simulations in planetary governance is only the tip of the proverbial iceberg. Antikythera considers computational simulations as experiential, epistemological, scientific, and political forms and develops a framework to understand these in relation to one another.

The politics of simulation, more specifically, is based in recursion: how the model itself affects what it models. This extends from political simulations to logistical simulations to financial simulations to experiential simulations: The model affects the modeled.

Antikythera’s research in this area draws on different forms of simulation and simulation technologies. These include machine sensing technologies (vision, sound, touch, etc.), synthetic experiences (including VR/AR), strategic scenario modeling (gaming, agent-based systems), active simulations of complex architectures (digital twins), and computational simulations of natural systems enabling scientific inquiry and foresight (climate models and cellular/genomic simulations). All of these pose fundamental questions about sensing and sensibility, world-knowing, and worldmaking.

They all have different relations to the real. While scientific simulations provide meaningful correspondence with the natural world and access to ground truths that would be otherwise inconceivable, virtual and augmented reality produce embodied experiences of simulated environments that purposefully take leave of ground truths. These two forms of simulation have inverse epistemological implications: one makes an otherwise inaccessible reality perceivable, while the other bends reality to suit what one wants to see. In between is where we live.

Existential Implications of the Simulations of the Future

Recursion can be direct or indirect. It can be a literal sensing/actuation cycle or the indirect negotiation of interpretation and response. The most nuanced recursions are reflexive. They mobilize action to fulfill or prevent a future that is implied by a simulation. Climate politics exemplifies the reflexivity of recursive simulations: Through planetary computation, climate science produces simulations of near-term planetary futures, the implications of which may be devastating. In turn, climate politics attempts to build planetary politics and planetary technologies in response to those implications, thereby assigning extraordinary political agency to computational simulations. The future not only depends on them—it is defined by them.

The scientific concept of climate change is an epistemological accomplishment of planetary-scale computation.

Scientific simulation, however, not only has deep epistemological value but also makes possible the most profound existential reckonings. Climate science is born of the era of planetary computation. Without the planetary sensing mechanisms, satellites, surface and air sensors, and ice-core samples, all aggregated into models, and—most importantly—the supercomputing simulations of climate past, present, and future, the scientific image of climate change as we know it cannot happen and could not have happened. The idea of the Anthropocene, and all that it means for how humans understand their agency, is an indirect accomplishment of computational simulations of planetary systems over time.

In turn, the relay from the idea of the Anthropocene to climate politics is based on the geopolitics of simulation too. The implications of simulations of the year 2050 are dire, and so climate politics seeks to mobilize a planetary politics in reflexive response to those predicted implications. This politics is recursive. Deliberate actions are consciously taken now to prevent the future. This is an extraordinary agency to confer on simulations. It is possible that many climate activists may not feel warmly about this, but climate politics is one of the important ways in which massive computational simulations are driving how human societies understand and organize themselves. It’s why the activists are in the streets to begin with.

Pre-Perception: Simulation as Predictive Epistemology

Quite often, though, the simulation comes first. Its predictive ability may imply that there must be something we should look for because the model suggests it has to be there. Thus, the prediction makes the description possible as much as the other way around. Such is the case with black holes, which were hypothesized and described mathematically long before they were detected, let alone observed. For the design of the black hole in the Nolan brothers’ movie Interstellar, scientific simulation software was used to give form to the mysterious entity based on consultations with Kip Thorne at Caltech and others. The math had described the physics of black holes, and the math was used to create a computational model, which in turn was used to create a dynamic visual simulation of something no one had ever seen.

Of course, a few years later, we did see one. The black hole at the center of the M87 galaxy was “observed” by the Event Horizon Telescope and a team at Harvard that included Sheperd Doeleman, Katie Bouman, and Peter Galison. It turns out we, the humans, were right. Black holes look like what the math says they must look like. The simulation was a way of inferring what must be true: where to look and how to see it. Only then did the terabytes of data from Event Horizon finally resolve into a picture.

Toy Worlds & Embodied Simulations

Friends from neuroscience (and artificial intelligence) may raise the point that simulation is not only an external technology with which intelligence figures out the world; in addition, simulations are how minds have intelligence at all. The cortical columns of animal brains are constantly predicting what will be next, running through little simulations of the world and the immediate future, resolving them with new inputs, and even competing with each other to organize perception and action.

Many AIs, especially those embodied in the world (such as driverless cars), are trained in toy world simulations, where they can explore more freely, bumping into the walls until they, like us, learn the best ways to perceive, model, and predict the real world.

Simulation as Model / Model as Simulation

Far from deceiving us, scientific simulations are arguably the essential mechanism by which otherwise inconceivable underlying realities are accessible to thought. From the very, very small in the quantum realm to the very, very large in the astro-cosmological realm, computational simulations are essential as a tool and as a way of thinking with models: a fundament of induction, deduction, and abduction.

At the same time, simulations are based on models of reality. The status of the model has been a preoccupying concern in the philosophy of science, even if simulations as such are more presumed than philosophized. Models are a way of coalescing disparate bits of data into a composite structure whose whole gives shape to its parts, suggesting their interactions and general comparability with other structures. The model is a tool to think with. Its value lies in its descriptive correspondence with reality, but this correspondence is determined by the model’s predictive value. If a scientific simulation can predict a phenomenon, its descriptive quality is implied. A model is also, by definition, a radical reduction in variables, e.g., a map reduces a territory. A geocentric or heliocentric model of the solar system can be constructed using Styrofoam balls. While one is definitely “less wrong” than the other, both are infinitely less complex than what they model.

This is especially important when what is simulated is as complex as the universe itself. Astrophysics is based almost entirely on rigorous computational simulations of phenomena that produce difficult-to-observe data. These data are assembled into computationally expensive models that ultimately provide degrees of confident predictability about the astronomic realities that situate us all. This is what we call “cosmology,” the meta-model of all models of reality, in which humans and other intelligences conceive of their place in space-time. Today, cosmology in the anthropological sense is achieved through cosmology in the computational sense.

Hemispherical Stacks

The Stack: Planetary Computation as Global System

Planetary computation refers to the interlocking cognitive infrastructures that structure knowledge, geopolitics, and ecologies. Its touch extends from the global to the intimate, from the nanoscale to the edge of the atmosphere and back again. It is not a single totality demanding transparency but instead a highly uneven, long-emerging blending of biosphere and technosphere.

As you stare at the glass slab in your hand, you are, as a user, connected to a planetary technology that both evolved and was planned in irregular steps over time, each component making use of others: an accidental, discontiguous megastructure. Instead of a single megamachine, planetary computation can be understood as being composed of modular, interdependent, functionally defined layers, not unlike a network protocol stack. These layers compose The Stack: the Earth layer, Cloud layer, City layer, Address layer, Interface layer, and User layer.

Earthly ecological flows become sites of intensive sensing, quantification, and governance. Cloud computing spurs platform economics and creates virtual geographies in its own image. Cities form vast discontiguous networks as they weave their borders into enclaves or escape routes. Virtual address systems locate billions of entities and events onto unfamiliar maps. Interfaces present vibrant augmentations of reality, standing in for extended cognition. Users, both human and nonhuman, populate this tangled apparatus. Every time you click on an icon, you send a relay all the way down the paths of connection and back again, activating (and being activated by) the entire planetary infrastructure hundreds of times a day.

The Emergence of Multipolar Geopolitics through Multipolar Computation

The emergence of planetary computation in the late twentieth century shifted not only the lines on the map but also the maps themselves. It distorted and reformed Westphalian political geography and created new territories in its own image. Large cloud platforms took on roles traditionally assumed by nation-states (identity, maps, commerce, etc.). At the same time, nation-states increasingly evolved into large cloud platforms (state services, surveillance, smart cities, etc.). In the last few decades, the division of the Earth into jurisdictions defined by land and sea has given way to a more irregular, unstable, and contradictory amalgam of overlapping sovereign claims to data, people, processes, and places defined instead by bandwidth, simulation, and hardware and software choke points.

In recent years, these stacks have been decisively fragmenting into multipolar hemispherical stacks defined by geopolitical competition and confrontation. A North Atlantic–Pacific stack based on American platforms has been delinking from a Chinese stack based on Chinese platforms, while India, the Gulf countries, Russia, and Europe have charted courses based on citizenship identification, protection, and information filtering.

From the Chip Wars to the EU’s AI decrees, this marks a shift toward a more multipolar architecture and hemispheres of influence, and the multipolarization of planetary-scale computation into “hemispherical stacks.” These segment and divide the planet into sovereign computational systems extending from energy and mineral sourcing, intercontinental transmission, and cloud platforms to address systems, interface cultures, and different politics of the “user.”

A New Map

This is both exciting and dangerous. It implies Galapagos effects of regional cultural diversity and artificially encapsulated information cultures. For geotechnology, just as for geopolitics, “digital sovereignty” is an idea beloved by both democracies and authoritarian regimes.

The ascendance of high-end chip manufacturing to the pinnacle of strategic plans, in the US and across the Taiwan Strait, is exemplary and corresponds with the removal of Chinese equipment from Western networks, the removal of Western platforms from Chinese mobile phones, and so on. Economies are defined by interoperability and delinking. But the situation extends further up the stack. The militarization of financial networks in the form of sanctions, the data-driven weaponization of populism, and the reformulation of “citizen” as a “private user with personal data” all testify to deeper shifts. In some ways, these parallel historical shifts in how new technologies alter societal institutions in their image, and yet the near-term and long-term future of planetary computation as a political technology is uncertain. Antikythera seeks to model these futures preemptively, drawing maps of otherwise uncharted waters.

Hemispherical Stacks describes how the shift toward a more multipolar geopolitics over the last five years and the shift toward a more multipolar planetary computation not only track one another but are, in many respects, the same thing.

The AI Stack

It is likely that the last half century during which “the stack” evolved and was composed was really just a warm-up for what is about to come: from computation as planetary infrastructure to computation as planetary cognitive infrastructure; from a largely state-funded “accidental megastructure” to multiple privately funded, overlapping, strategically composed, and discontiguous systems; from the gathering, storage, and sorting of information flows to the training of large and small models and serving generative applications on a niche-by-niche scale; from von Neumann architectures and procedural programming to neuromorphic systems and the collapse of the user vs. programmer distinction; and from sending light and inexpensive information to heavy hardware to accessing heavy information loads on light hardware. Despite how unprepared mainstream international relations may be for this evolution, this is not science fiction; this is last week.

Chip Race: Adversarial Computational Supply Chains

Computation is, in the abstract, a mathematical process, but it is also one that uses physical forces and matter to perform real calculations. Math may not be tangible, but computation as we know it very much is, since electricity moves in tiny pathways on a base made of silicon. It is also worth remembering that the tiny etchings in the smooth surface of a chip, with spaces between measured in nanometers, are put there by a lithographic process. The intricate pathways through which a charge moves to compute are, in a way, a very special kind of image.

The machines that make the machines are the precarious perch on which fewer than a dozen companies hold together the self-replication of planetary computation. The next decade is dedicated to the replication of this replication supply chain itself: the race to build better stacks. If society runs on computation, the ability to make computational hardware is the ability to make a society. This means that the ability to design and manufacture cutting-edge chips, shrinking every year toward perhaps the absolute physical limits of manipulable scale, is now a matter of national security.

Chips are emblematic of all the ways that computational supply chains have shifted and consolidated the axes of power around which economies rotate. One Antikythera project, “Cloud Megaregionalization,” observes that a new kind of regional planning has emerged—from Arizona to Malaysia to Bangalore—that concentrates cloud manufacturing in strategic locations that balance many factors: access to international trade, energy and water sourcing, access to educated labor, and physical security. These are the new criteria for how and where to build the cloud. Ultimately, the chip race is a race to build not just chips but also the urban regions that build the chips.

Astropolitics: Extraplanetary Sensing and Computation

Another closely related race is the reemergence of outer “space” as a contested zone of exploration and intrigue, from satellites to the moon and Mars and back again. It is being driven by advances in planetary computation and in turn drives those advances, spreading them beyond terrestrial grounding.

Planetary computation becomes extraplanetary computation. If geopolitics is now driven by the governance of model simulations, then the seat of power is the view from anywhere. That is, if geopolitics is defined by the organization of terrestrial states, astropolitics is and will be defined by the organization of Earth’s atmosphere, its orbital layers, and what lies just beyond. The high ground is now beyond the Kármán Line, the territory dotted with satellites looking inward and outward.

In the 1960s, much was made of how basic research for the space race benefited everyday technologies. Today, however, this economy of influence is reversed. Many of the technologies that are making the new space race possible—machine vision and sensing, chip miniaturization, information relays, and other standards—were first developed for consumer products. As planetary computation matured, the space race turned inward toward miniaturization, and today the benefits of these move outward again.

The domain of space law, once obscure, will come to define international law in the next decades, as it is the primary body of law that takes an entire astronomic body as its jurisdiction, of which Earth and all those things in its orbit are also prime examples.

What do we learn from this? How is this an existential technology? There is no planetarity without extraplanetarity: To truly grasp the figure of our astronomic perch is a Copernican trauma by which the ground is not “grounding” but a gravitational plane, and the night sky is not the heavens but a time machine showing us light from years before the evolution of humans. For this, the archaic distinction between “down here” and “up there” also fractures.

The Technical Milieu of Governance

The apparent technologically determined qualities of these tectonic shifts may undermine some mainstream political theory’s epistemological habits: social reductionism and cultural determinism. While the fault lines by which hemispheres split trace the boundaries of much older socioeconomic geographic blocs, each bloc is increasingly built on a similar platform structure. This puts them in direct competition for the ability to build the more advanced computational stack, thereby building the more advanced economy and, through this, composing society.

The “political” and “governance” are not the same thing. Both always exist in some technical milieu that causes and limits their development. If the “political” refers to how the symbols of power are agonistically contested, then governance (inclusive of the more cybernetic sense of the term) refers to how any complex system (including a society) is able to sense, model, and recursively act back on itself. Some of the confusion in forms of political analysis born of a pre-computational social substrate seems to result from the closely held axiom that planetary computation is something to be governed by political institutions from an external and supervisory position. The reality is that planetary computation is governance in its most direct, immanent sense: it is what senses, models, and governs and what, at present, is reforming geopolitical regimes in its image.

Beyond Cultural Determinism

Not surprisingly, cultural determinism enjoys an even deeper commitment in the humanities, and there, even when planetary computation is recognized as fundamental, the sovereignty of cultural difference is defended less as a cause of computation’s global emergence (as it may be for political science) and more as a remedy for its emergence. Gestures toward pluralism, posited as both a means and end, confront the global isomorphic qualities of planetary computation, not as the expression of artificial convergent evolution but as the expressive domination of a particular culture. To contest this domination is thus to contest the expression. In its most extreme forms, pluralism is framed as a clash of reified civilizations, each possessing essential qualities and “ways of being technological”—one Western, one Chinese, etc. Beyond the gross anthropological reduction, this approach evades the real project for the humanities: not how culture can assert itself against global technology, but how planetary computation is the material basis of not only new “societies” and “economies” but also different relations between human populations bound to planetary conditions.

As I put it in the original “Hemispherical Stacks” essay:

"Despite the integrity of mutual integration, planetarity cannot be imagined in opposition to plurality, especially as the latter term is now over-associated with the local, the vernacular, and with unique experiences of historical past(s). That is, while we may look back on separate pasts that may also set our relations, we will inhabit conjoined futures. That binding includes a universal history, but not one formulated by the local idioms of Europe, or China, or America, or Russia, nor by a viewpoint collage of reified traditions and perspectives, but by the difficult coordination of a common planetary interior."

It is not that planetary-scale computation brought the disappearance of the outside; it helped reveal that there never was an outside to begin with.

Planetary Sapience

What is the relationship between the planetary and intelligence? What must it be now and in the future?

These questions are equally philosophical and technological. The relationship is one of disclosure: Over millions of years, intelligence has emerged from a planetary condition which, quite recently, has been disclosed to that intelligence through technological perception. The relationship is also one of composition: For the present and the future, how can complex intelligence—both evolved and artificialized—conceive a more viable long-term coupling of biosphere and technosphere?

Over billions of years, Earth has folded its matter to produce biological and non-biological creatures capable of not only craft and cunning but also feats of artistic and scientific abstraction. Machines now behave and communicate intelligently in ways once reserved for precocious primates. Intelligence is planetary in both origin and scope. It emerges from the evolution of complex life, a stable biosphere, and intricate perceptual-cognitive organs. Both contingent and convergent, intelligence has taken many forms, passing through forking stages of embodiment, informational complexity, and eventually even (partial) self-awareness.

Planetary Computation and Sapience

Planetary-scale computation has enabled the sensing and modeling of climate change and thus informed the conception of an Anthropocene and all its existential reckonings. Among the many lessons for philosophy of technology is that, in profound ways, humans (and the species and systems that they cultivated and were cultivated by) terraformed the planet in the image of their industry for centuries before truly comprehending the scale of these effects. Planetary systems, both large and small and inclusive of human societies and technologies, have evolved the capacity to self-monitor, self-model, and (hopefully deliberately) self-correct. Through these artificial organs for monitoring its own dynamic processes, the planetary structure of intelligence scales and complexifies. Sentience becomes sapience: sensory awareness becomes judgment and governance.

Modes of Intelligence

The provocation of planetary sapience is not grounded in an anthropomorphic vision of Earth constituted by a single “noosphere.” Modes of intelligence are identified as different types at multiple scales, some ancient and some very new. These include:

- mapping the extension and externalization of sensory perception;

- redefining computer science as an epistemological discipline based not only on algorithmic operations but also on computational planetary systems;

- comparing stochastic predictive processing in both neural networks and artificial intelligence;

- embracing the deep time of the planetary past and future as a foundation for a less anthropomorphic view of history;

- modeling life by the transduction of energy and/or the transmission of information, exploring substrate dependence or independence of general intelligence;

- embracing astronautics and space exploration as techno-philosophical pursuits that define the limit of humanity’s tethering to Earth and extend beyond it;

- exploring how astroimaging—such as Earth seen from space and distant cosmic events seen by Earth—has contributed to the planetary as a model orientation;

- theorizing simulations as epistemological technologies that allow for prediction, speculation, and ultimately a synthetic phenomenology;

- measuring evolutionary time in the complexity of the material objects that surround us and constitute us;

- recomposing definitions of “life,” of “intelligence,” and of “technology” in light of what is revealed by the increasing artificialization and recombination of each.

These modes of intelligence together lead us to construct a technological philosophy that might synthesize them into one path toward greater planetary sapience, creating the capacity for complex intelligence to make its own future coherent.

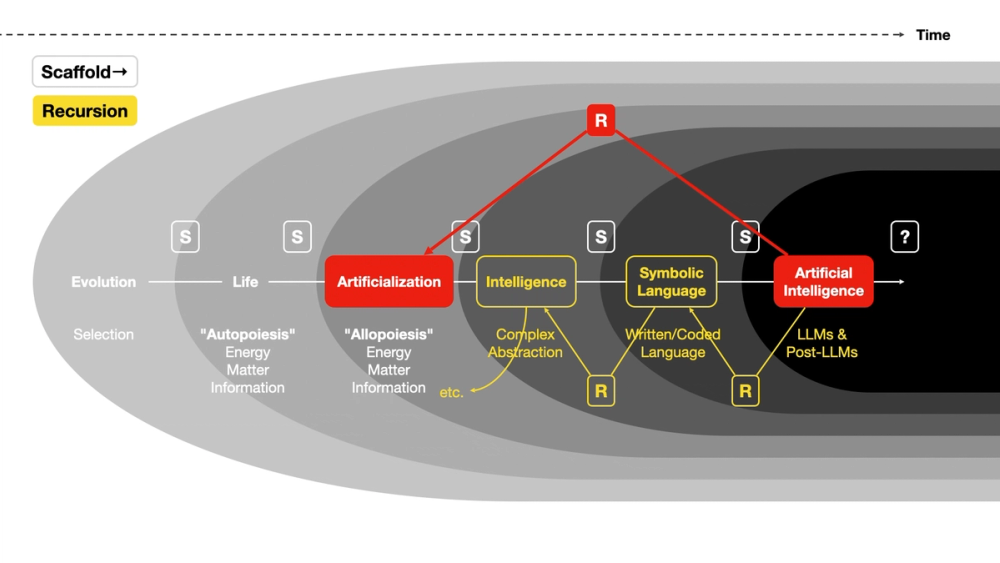

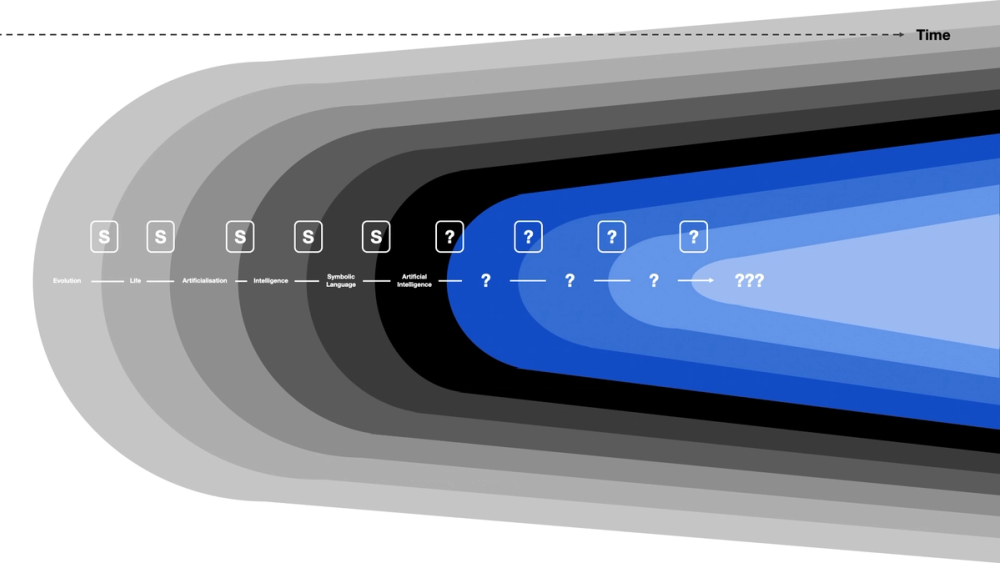

The Evolution of Artificialization, Intelligence and the Artificialized Intelligence

To properly answer the questions posed, we need to locate the emergence of artificial computational intelligence within the longer arc of the evolution of intelligence as such, as well as its relationship to artificialization as such. The two have, I argue, always been intertwined, as they are now and as they will be for the foreseeable and unforeseeable futures.

Our thinking on this is influenced by Sara Walker and Lee Cronin’s provocative assembly theory, which posits that evolutionary natural selection begins not with biology but (at least) with chemistry. The space of possible molecules is radically constrained and filtered through selection toward those most stable and most conducive to becoming scaffolding components for more complex forms. Those forms that are able to internalize and process energy, information, and matter with regular efficiency can autopoietically replicate themselves to become what we can call “life” (also by Agüera y Arcas’s computational definition). The process is best defined not by how a single organism or entity internalizes the environment to replicate itself (as a cell does) but by how a given population distributed within an environment evolves through the cooperative capacity to increase energy, matter, and information capture for collective replication. I invite the reader to look around and consider all the things on which they depend to survive the day.

For autopoietic forms to succeed in folding more of the environment into themselves to replicate, evolution arguably selects for their capacity to transform their niche in ways that make it more suitable for this process. For example, by reducing the amount of energy expenditure necessary for energy capture, a given population is able to accelerate its growth and expand its size, complexity, and robustness. More of the world is transformed into that population because it is capable of allopoiesis, the process of transforming the world into systems that are external to the agent itself. That is, evolution seems to select for forms of life capable of artificialization. Perhaps those species most capable of artificialization are the greatest beneficiaries of this tendency.

Complexity begets complexity. Simple allopoiesis and environmental artificialization may be an all but autonomic process, but greater cooperation between agents allows for more complex, efficient, and impactful forms of artificialization. Here, selection pressure enables the evolution of more nuanced forms of individual and collective intelligence as well as more powerful forms of technology. We might define “technology” very generally as a durable scaffolding apparatus that is leveraged by intelligent agents to transform and internalize matter, energy, or information at scales and with a regularity and precision that would otherwise be impossible. In this regard, “technology” occupies a parallel symbiotic evolutionary track, one that determines and is determined by the ongoing evolution of intelligent life. What emerges are technologically enabled conceptual abstractions about how the world is and, perhaps more importantly, counterfactual models about how it might be otherwise. For a form of autopoietic life (including humans) to become really good at intelligence, it needs to instantiate counterfactual models and communicate them. This requires something like formally coded symbolic language, which eventually evolved part and parcel with all the preceding biosocial and sociotechnical scaffolds.

Simple evolutionary processes are what enable autopoietic forms to emerge, which become scaffolds for yet more complex forms, which become scaffolds for yet more complex forms capable of allopoietic accomplishments, which become scaffolds for complex intelligence and technologies, which in turn become scaffolds for durable cultural and scientific abstractions as mediated by symbolic language and inscription. The accumulation and transgenerational transmission of conceptual and technical abstractions through linguistic notation in turn amplifies not only the aggregate intelligence of the allopoietically sophisticated population but also its real capacity for transforming its world for autopoietic replication. Language began a great acceleration; another threshold was passed with symbolic forms, another with coded notation, another with the mechanical capture of condensed energy, and another with the artificialization of computation.

The earliest forms of artificialization were driven by primordial forms of intelligence and vice versa. Each evolved in relation to the other to such a degree that from certain perspectives they could be seen as the same planetary phenomenon: autopoietic matter capable of allopoiesis (and technology), because it is intelligent enough and capable of devoting energy to intelligence, because it is allopoietic. Regardless, intelligence is at least a driving cause of the technological and planetary complexification of artificialization as an effective process. The question that demands to be asked is then, “What happens when intelligence, the driving force of artificialization for millions of years, is itself artificialized?” What is foreseeable through the artificialization of artificialization itself?

It is perhaps not altogether surprising that language would be (for now) the primary scaffold upon which artificialized intelligence is built. It is also assured that the artificialization of language will recursively transform the scaffold of language on which it depends, as much as the emergence of coded language affected social intelligence, the scaffold on which it depends, and intelligence affects allopoietic artificialization, the scaffold on which it depends, and so on. Ultimately, the long arc of bidirectional recursion may suggest that the emergence of increasingly complex artificialized intelligence will affect the direction of life itself, how it replicates and how it directs and is directed by evolutionary selection.

The most pressing question now is:

“For what is AI a scaffold? What comes next?”

There is no reason to believe that this is the last scaffold, that history has ended, that evolution has reached anything but another phase in an ongoing transition in which each of our lives takes momentary shape. This isn’t ending, and AI is not the last thing. Just as intelligence was a scaffold for symbolic language, which was a scaffold for AI, so AI is a scaffold for something else, which is a scaffold for something else, which is a scaffold for something else, and so on. We’re building a scaffold for something unforeseeable. What we today call “AI” replicates both autopoietically and allopoietically: it is “life” if not also alive. It would be an enormous conceptual and practical mistake to believe that it is merely a “tool,” which implies that it is separate from and subordinate to human tactical gestures, that it is an inert lump of equipment to be deployed in the service of clear compositional intention, and that it has no meaningful agency beyond that which it is asked to have on our provisional behalf.

It is rather, like all the various things that make humans human and life life, a complex form that emerges from the scaffolds of the planetary processes that preceded it, and it is a scaffold for another thing yet to come, and on and on.